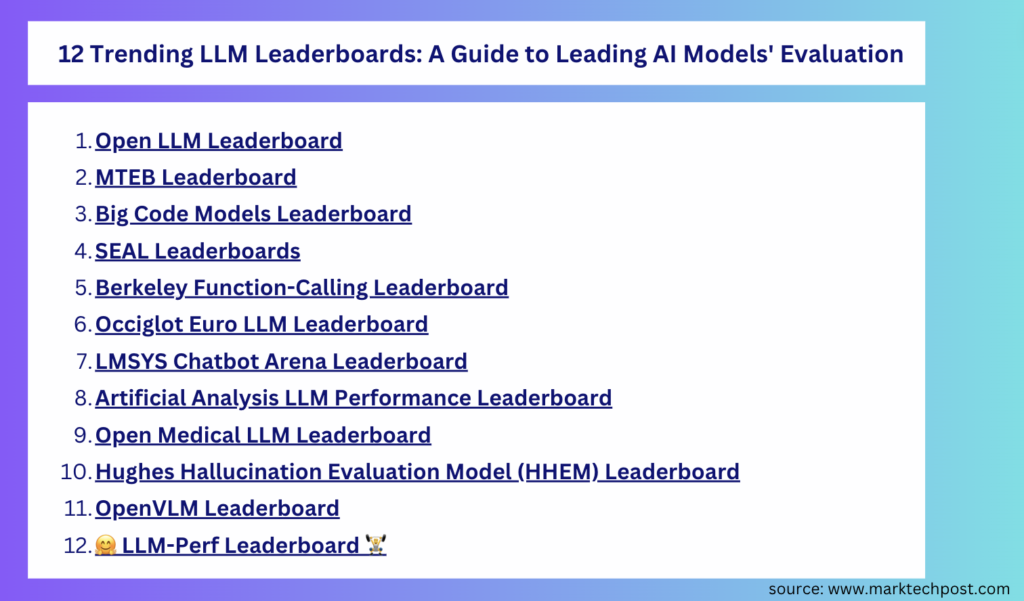

Here is a list of the top 12 trending LLM leaderboards, a guide to evaluating leading AI models.

Open LLM Leaderboard

With so many LLMs and chatbots emerging every week, it can be hard to separate real progress from hype. The Open LLM Leaderboard addresses this challenge by using the EleutherAI Language Model Evaluation Harness to benchmark models on six tasks: AI2 Reasoning Challenge (ARC), HellaSwag, MMLU, TruthfulQA, Winogrande, and GSM8K. Together, these benchmarks test a range of reasoning and general-knowledge skills. Detailed numerical results and model details are available on Hugging Face.
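Multiple-choice benchmarks such as ARC and HellaSwag are typically scored by asking the model how likely each answer choice is as a continuation of the question, then picking the most likely one. The Python sketch below illustrates that idea under simplifying assumptions: `loglikelihood` is a hypothetical stand-in for a real model call, and dividing by character length is just one simple normalization option. It is not the harness's actual code.

```python
from typing import Callable, Sequence


def pick_answer(
    context: str,
    choices: Sequence[str],
    loglikelihood: Callable[[str, str], float],
    normalize: bool = False,
) -> int:
    """Index of the choice the model finds most likely as a continuation of `context`.

    `loglikelihood(context, continuation)` is a hypothetical stand-in for a model
    call returning the summed log-probability of the continuation's tokens.
    With normalize=True the score is divided by the choice's character length,
    one simple way to avoid penalizing longer answers.
    """
    scores = []
    for choice in choices:
        ll = loglikelihood(context, choice)
        scores.append(ll / max(len(choice), 1) if normalize else ll)
    # Ties resolve to the first highest-scoring choice.
    return max(range(len(choices)), key=scores.__getitem__)


def accuracy(predictions: Sequence[int], gold: Sequence[int]) -> float:
    """Fraction of questions where the picked choice matches the gold answer."""
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


# Toy "model": fixed, made-up log-likelihoods per answer choice.
fake_ll = {" Paris": -1.2, " London": -3.5, " Berlin": -4.0}
pred = pick_answer("The capital of France is", list(fake_ll), lambda ctx, c: fake_ll[c])
print(pred)  # → 0
```

Benchmark accuracy is then the fraction of questions where `pick_answer` agrees with the labeled answer.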

MTEB Leaderboard

Text embeddings are often evaluated on a small set of datasets from a single task, without considering how well they transfer to other tasks such as clustering and re-ranking. This lack of comprehensive evaluation makes it hard to track progress in the field. The Massive Text Embedding Benchmark (MTEB) addresses this issue by covering eight embedding tasks across 58 datasets and 112 languages. With 33 models benchmarked, MTEB was, at its release, the most extensive evaluation of text embeddings. Its findings show that no single text embedding method excels across all tasks, indicating that further work is needed toward a universal text embedding method.
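For semantic textual similarity (STS), one of MTEB's task types, a model is commonly scored by the Spearman correlation between the cosine similarity of each sentence pair's embeddings and human similarity labels. The pure-Python sketch below assumes the embeddings are already computed; the 2-dimensional vectors and gold labels in the usage lines are made-up toy values, not MTEB data.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def ranks(xs: Sequence[float]) -> list[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    r = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return float("nan")  # correlation is undefined for constant inputs
    return cov / (sx * sy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation = Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def sts_score(pairs: Sequence[tuple[Vector, Vector]], gold: Sequence[float]) -> float:
    sims = [cosine(u, v) for u, v in pairs]
    return spearman(sims, gold)


# Toy embeddings whose similarity order matches the gold labels exactly.
pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [1.0, 1.0]), ([1.0, 0.0], [0.0, 1.0])]
gold = [5.0, 3.0, 0.5]
score = sts_score(pairs, gold)
print(round(score, 6))  # → 1.0
```

Because Spearman only looks at rank order, a model is rewarded for ordering pairs correctly even if its raw cosine values are on a different scale from the human labels.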

BigCode Models Leaderboard

🤗 Inspired by the Open LLM Leaderboard, this leaderboard compares multilingual code generation models on the HumanEval and MultiPL-E benchmarks. HumanEval measures functional correctness on 164 Python problems, while MultiPL-E translates these problems into 18 programming languages. Throughput is also measured at batch sizes of 1 and 50. The evaluation uses the original benchmark prompts, separate prompts for base and instruction-tuned models, and different evaluation parameters. Rankings are determined by average pass@1 score and win rate across languages, and memory usage is measured with Optimum-Benchmark.
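pass@1 is commonly computed with the unbiased pass@k estimator introduced alongside HumanEval: generate n samples per problem, count the c samples that pass the unit tests, and compute 1 − C(n−c, k) / C(n, k). The sketch below implements that formula and averages it over problems; it assumes the leaderboard follows this standard estimator, and the sample counts in the usage line are made up. For k = 1 the formula reduces to c / n.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems; `results` holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical counts for three problems, 20 samples each.
print(mean_pass_at_k([(20, 10), (20, 0), (20, 20)], k=1))  # → 0.5
```

Sampling many completions and using the estimator gives a lower-variance pass@1 than judging a single completion per problem.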

SEAL Leaderboard

The SEAL leaderboard uses Elo-scale rankings to compare model performance across datasets. Human evaluators compare models’ responses to prompts, and their judgments determine which model wins, loses, or ties each comparison. Bradley-Terry (BT) coefficients are then fitted by maximum likelihood estimation, which amounts to minimizing binary cross-entropy loss. Rankings are based on average scores and win rates across multiple languages, with bootstrapping used to estimate confidence intervals. This methodology is designed to make the evaluation comprehensive and reliable. Leading models are queried through their respective APIs so that comparisons stay up to date.
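The sketch below fits Bradley-Terry coefficients by plain gradient descent on the binary cross-entropy of pairwise outcomes, modeling P(a beats b) = sigmoid(β_a − β_b), and then maps them to an Elo-like scale. Counting a tie as a 0.5 outcome, the scale factor 400 / ln(10), the base rating of 1000, and the learning-rate and step settings are all assumptions borrowed from common Elo conventions, not SEAL's published settings; the battles are made up.

```python
import math


def fit_bradley_terry(
    battles: list[tuple[str, str, float]],
    models: list[str],
    lr: float = 0.5,
    steps: int = 1000,
) -> dict[str, float]:
    """Fit BT coefficients by minimizing mean binary cross-entropy.

    Each battle is (model_a, model_b, outcome): 1.0 if a wins, 0.0 if b wins,
    0.5 for a tie (an assumed convention).
    """
    idx = {m: i for i, m in enumerate(models)}
    beta = [0.0] * len(models)
    for _ in range(steps):
        grad = [0.0] * len(models)
        for a, b, y in battles:
            i, j = idx[a], idx[b]
            p = 1.0 / (1.0 + math.exp(-(beta[i] - beta[j])))  # P(a beats b)
            # d(BCE)/d(beta_i) = p - y and d(BCE)/d(beta_j) = y - p
            grad[i] += p - y
            grad[j] -= p - y
        beta = [bt - lr * g / len(battles) for bt, g in zip(beta, grad)]
        mean = sum(beta) / len(beta)
        beta = [bt - mean for bt in beta]  # BT is shift-invariant; pin the mean at 0
    return dict(zip(models, beta))


def to_elo_scale(beta: dict[str, float], scale: float = 400 / math.log(10),
                 base: float = 1000.0) -> dict[str, float]:
    """Map BT coefficients so a 400-point gap means 10:1 predicted odds."""
    return {m: base + scale * b for m, b in beta.items()}


# Hypothetical battle records.
battles = (
    [("A", "B", 1.0)] * 7 + [("A", "B", 0.0)] * 3
    + [("B", "C", 1.0)] * 6 + [("B", "C", 0.0)] * 4
    + [("A", "C", 1.0)] * 8 + [("A", "C", 0.0)] * 2
)
ratings = to_elo_scale(fit_bradley_terry(battles, ["A", "B", "C"]))
for model, r in sorted(ratings.items(), key=lambda kv: -kv[1]):
    print(f"{model}: {r:.0f}")
```

Fitting all battles jointly, rather than updating ratings one game at a time as classic Elo does, makes the result independent of the order in which votes arrive.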

Berkeley Function Call Leaderboard

The Berkeley Function-Calling Leaderboard (BFCL) evaluates LLMs on their ability to call functions and tools, a key capability for powering applications such as LangChain and AutoGPT. BFCL features a diverse dataset of 2,000 question-function-answer pairs spanning multiple programming languages and scenarios, from simple to complex. Its evaluation covers cases such as parallel function calls and function relevance detection, checks both executability and accuracy, and reports detailed cost and latency metrics. At the time of writing, the leaders include GPT-4, OpenFunctions-v2, and Mistral-medium. The leaderboard offers insight into each model’s strengths and common errors, which can help developers improve function calling in their applications.
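Accuracy checks of this kind compare the function call a model emits with the expected call. The sketch below is a deliberately simplified, hypothetical checker, not BFCL's actual evaluator: it assumes the model outputs a JSON list of calls, requires the function name to match, rejects unexpected parameters, and accepts each argument if it equals one of a set of allowed values. For parallel calls it matches predicted calls to expected calls in any order. The `get_weather` function and its arguments are invented for illustration.

```python
import json
from itertools import permutations
from typing import Any


def call_matches(pred: dict[str, Any], expected: dict[str, Any]) -> bool:
    """pred: {"name", "arguments": {param: value}};
    expected: {"name", "arguments": {param: [allowed values]}}."""
    if pred.get("name") != expected["name"]:
        return False
    args = pred.get("arguments", {})
    if set(args) - set(expected["arguments"]):
        return False  # the model invented a parameter
    # In this simplified version every expected parameter is required.
    return all(p in args and args[p] in allowed
               for p, allowed in expected["arguments"].items())


def parallel_matches(pred_calls: list[dict], expected_calls: list[dict]) -> bool:
    """True if some ordering of the predicted calls matches the expected calls one-to-one."""
    if len(pred_calls) != len(expected_calls):
        return False
    return any(all(call_matches(p, e) for p, e in zip(perm, expected_calls))
               for perm in permutations(pred_calls))


raw = ('[{"name": "get_weather", "arguments": {"city": "Berlin", "unit": "celsius"}},'
       ' {"name": "get_weather", "arguments": {"city": "Paris", "unit": "celsius"}}]')
expected = [
    {"name": "get_weather", "arguments": {"city": ["Paris"], "unit": ["celsius", "C"]}},
    {"name": "get_weather", "arguments": {"city": ["Berlin"], "unit": ["celsius", "C"]}},
]
print(parallel_matches(json.loads(raw), expected))  # → True
```

Trying every permutation is exponential in the number of calls, which is acceptable only because parallel-call test cases usually involve a handful of calls.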

Occiglot Euro LLM Leaderboard

This leaderboard is a copy of Hugging Face’s Open LLM Leaderboard, extended with translated benchmarks. With so many LLMs and chatbots released every week, it can be hard to separate real progress from hype. The Occiglot Euro LLM Leaderboard uses a fork of the EleutherAI Language Model Evaluation Harness to evaluate models on five benchmarks: AI2 Reasoning Challenge, HellaSwag, MMLU, TruthfulQA, and Belebele. These benchmarks test model performance across a range of tasks and languages. Detailed results and model details are available on Hugging Face. Some models on the leaderboard are flagged and should be treated with caution.
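Because this leaderboard reports the same benchmarks in several languages, any single headline number has to aggregate over both benchmarks and languages. The sketch below shows one plausible aggregation: an unweighted mean per language, followed by a mean over languages so that each language counts equally. The weighting, the language codes, and the scores are assumptions for illustration, not the leaderboard's documented formula.

```python
from statistics import mean


def language_averages(scores: dict[str, dict[str, float]]) -> dict[str, float]:
    """scores: {language: {benchmark: score}} -> {language: mean over its benchmarks}."""
    return {lang: mean(list(bench.values())) for lang, bench in scores.items()}


def overall_average(scores: dict[str, dict[str, float]]) -> float:
    """Unweighted mean of the per-language averages (each language counts equally)."""
    return mean(list(language_averages(scores).values()))


# Hypothetical scores (percent) for one model in two languages.
scores = {
    "de": {"arc": 50.0, "hellaswag": 60.0, "mmlu": 45.0, "truthfulqa": 40.0, "belebele": 70.0},
    "fr": {"arc": 52.0, "hellaswag": 62.0, "mmlu": 47.0, "truthfulqa": 42.0, "belebele": 72.0},
}
print(language_averages(scores))  # → {'de': 53.0, 'fr': 55.0}
print(overall_average(scores))    # → 54.0
```

Averaging per language first keeps a language with more benchmarks from dominating the overall score.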

LMSYS Chatbot Arena Leaderboard

LMSYS Chatbot Arena is a crowdsourced, open platform for evaluating LLMs. Drawing on over 1,000,000 human pairwise comparisons, it ranks models with the Bradley-Terry model and displays the results on an Elo scale. As of May 27, 2024, the leaderboard contains 102 models and 1,149,962 votes. New leaderboard categories, such as coding and long user queries, are available in preview. Users can vote at chat.lmsys.org. Rankings take statistical confidence intervals into account, and the detailed methodology is described in the accompanying paper.
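Confidence intervals of this kind are commonly obtained by bootstrapping: resample the votes with replacement many times, recompute the statistic on each resample, and read off percentiles. To keep it short, the sketch below applies a percentile bootstrap to a single model's win rate; a full leaderboard would instead recompute the Bradley-Terry ratings on each resample. The outcomes, the 1,000 rounds, and counting ties as 0.5 are assumptions for illustration.

```python
import random
from typing import Callable, Sequence


def bootstrap_ci(
    data: Sequence[float],
    statistic: Callable[[Sequence[float]], float],
    rounds: int = 1000,
    alpha: float = 0.05,
    seed: int = 0,
) -> tuple[float, float]:
    """Percentile bootstrap interval for `statistic` at confidence level 1 - alpha."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    stats = sorted(statistic(rng.choices(data, k=len(data))) for _ in range(rounds))
    lo = stats[int(rounds * alpha / 2)]
    hi = stats[min(rounds - 1, int(rounds * (1 - alpha / 2)))]
    return lo, hi


def win_rate(outcomes: Sequence[float]) -> float:
    return sum(outcomes) / len(outcomes)


# Hypothetical outcomes for one model: 1.0 = win, 0.0 = loss, 0.5 = tie.
outcomes = [1.0] * 60 + [0.0] * 30 + [0.5] * 10
lo, hi = bootstrap_ci(outcomes, win_rate)
print(f"win rate {win_rate(outcomes):.2f}, 95% CI [{lo:.2f}, {hi:.2f}]")
```

When two models' intervals overlap heavily, the data do not clearly separate them, which is why confidence intervals matter for ranking.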

Artificial Analysis LLM Performance Leaderboard

Artificial Analysis benchmarks LLMs served through serverless API endpoints to measure quality and performance from a customer’s perspective. Serverless endpoints are priced per token, with different prices for input and output tokens. Performance metrics include time to first token (TTFT), throughput (tokens per second), and total response time for 100 output tokens. Quality is assessed as a weighted average of normalized scores from MMLU, MT-Bench, and Chatbot Arena Elo. Tests run daily across a range of prompt lengths and load scenarios, so the results are intended to reflect the real customer experience with both proprietary and open-weights models.
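The performance metrics can be derived from per-token arrival times recorded by a streaming client. The sketch below assumes timestamps in seconds measured from when the request was sent. It defines throughput as output tokens per second after the first token arrives, which is one common convention and an assumption here, and it prices a request using separate per-million-token input and output rates. All numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RequestMetrics:
    ttft: float        # seconds until the first output token arrives
    throughput: float  # output tokens per second after the first token
    total_time: float  # seconds until the last output token arrives


def measure(token_times: Sequence[float]) -> RequestMetrics:
    """token_times: arrival time (s) of each output token, measured from request send."""
    ttft = token_times[0]
    total = token_times[-1]
    gen_time = total - ttft
    tps = (len(token_times) - 1) / gen_time if gen_time > 0 else float("inf")
    return RequestMetrics(ttft, tps, total)


def request_cost(input_tokens: int, output_tokens: int,
                 usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Cost in USD with separate prices per million input and output tokens."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


# Hypothetical stream: first token after 0.5 s, then one token every 20 ms (100 tokens).
token_times = [0.5 + 0.02 * i for i in range(100)]
m = measure(token_times)
print(f"TTFT {m.ttft:.2f} s, {m.throughput:.1f} tok/s, total {m.total_time:.2f} s")
print(f"cost ${request_cost(1000, 100, 0.5, 1.5):.5f}")
```

Separating TTFT from throughput matters because a provider can have a fast first token but slow generation, or the reverse, and users perceive the two differently.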

Open Medical LLM Leaderboard

The Open Medical LLM Leaderboard tracks, ranks, and evaluates LLMs on medical question-answering tasks. It evaluates models on a variety of medical datasets, including MedQA (USMLE), PubMedQA, MedMCQA, and the MMLU subsets related to medicine and biology. These datasets cover areas such as clinical knowledge, anatomy, and genetics, and include multiple-choice and open-ended questions that require medical reasoning.

The main evaluation metric is accuracy (ACC). Models can be submitted for automatic evaluation via the “Submit” page. The leaderboard uses the EleutherAI Language Model Evaluation Harness. GPT-4 and Med-PaLM-2 results are taken from their official papers, with Med-PaLM-2 reported using 5-shot accuracy for comparison. Gemini results come from the recent Clinical-NLP (NAACL 2024) paper. For more details on the datasets and technical setup, see the leaderboard’s “About” page and discussion forum.

Hughes Hallucination Evaluation Model (HHEM) Leaderboard

The Hughes Hallucination Evaluation Model (HHEM) leaderboard measures how often LLMs hallucinate when summarizing documents. A hallucination is an instance where the model introduces factually incorrect or irrelevant content into a summary. The leaderboard uses Vectara’s HHEM to assign each summary a hallucination score between 0 and 1, based on 1,006 documents from datasets such as the CNN/Daily Mail Corpus. Metrics include hallucination rate (the percentage of summaries with a score below 0.5), factual consistency rate, answer rate (the percentage of non-empty summaries), and average summary length. Models not hosted on Hugging Face, such as GPT variants, are evaluated by the HHEM team, which uploads their results.
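Once each summary has an HHEM score, the headline metrics come down to simple counting. The sketch below does not call the HHEM model itself. It assumes scores in [0, 1] where lower means more likely hallucinated, treats empty summaries as unanswered and excludes them from the hallucination rate, measures length in whitespace-separated words, and defines factual consistency rate as 100% minus the hallucination rate. These conventions are assumptions, and the summaries and scores are made up.

```python
from typing import Sequence


def hhem_metrics(summaries: Sequence[str], scores: Sequence[float],
                 threshold: float = 0.5) -> dict[str, float]:
    """Leaderboard-style metrics from per-summary hallucination scores (percent values)."""
    # Empty summaries count as unanswered; their scores are ignored.
    answered = [(s, sc) for s, sc in zip(summaries, scores) if s.strip()]
    answer_rate = 100.0 * len(answered) / len(summaries)
    if not answered:
        return {"hallucination_rate": 0.0, "factual_consistency_rate": 100.0,
                "answer_rate": answer_rate, "avg_summary_words": 0.0}
    hallucinated = sum(1 for _, sc in answered if sc < threshold)
    hallucination_rate = 100.0 * hallucinated / len(answered)
    return {
        "hallucination_rate": hallucination_rate,
        "factual_consistency_rate": 100.0 - hallucination_rate,
        "answer_rate": answer_rate,
        "avg_summary_words": sum(len(s.split()) for s, _ in answered) / len(answered),
    }


summaries = [
    "The company reported higher profits.",
    "",
    "Officials said the bridge will reopen in May.",
    "The CEO resigned last year.",
]
scores = [0.91, 0.0, 0.78, 0.12]  # hypothetical HHEM outputs
print(hhem_metrics(summaries, scores))
```

Reporting answer rate alongside hallucination rate guards against a model that looks factually consistent simply because it declines to summarize difficult documents.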

OpenVLM Leaderboard

This leaderboard presents evaluation results for 63 vision language models (VLMs), obtained with the open-source evaluation framework VLMEvalKit. It covers 23 multimodal benchmarks, includes models such as GPT-4V, Gemini, QwenVLPlus, and LLaVA, and is current as of May 27, 2024.

Metrics:

- Average score: Average score across all VLM benchmarks (normalized from 0 to 100, higher is better).

- Average rank: Average rank across all VLM benchmarks (lower is better).

The main results are based on eight benchmarks: MMBench_V11, MMStar, MMMU_VAL, MathVista, OCRBench, AI2D, HallusionBench, and MMVet. The subsequent tabs provide detailed evaluation results for each dataset.
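Both summary metrics can be computed from a table of per-benchmark scores. The sketch below assumes every score is already normalized to the 0 to 100 range. It ranks models within each benchmark with 1 as best and gives tied models the same (best) rank; that tie rule is an assumption. The model names and numbers are hypothetical, chosen to show how two models with the same average score can still differ in average rank.

```python
from statistics import mean


def average_scores(table: dict[str, dict[str, float]]) -> dict[str, float]:
    """table: {model: {benchmark: score in [0, 100]}} -> mean score (higher is better)."""
    return {m: mean(list(s.values())) for m, s in table.items()}


def average_ranks(table: dict[str, dict[str, float]]) -> dict[str, float]:
    """Rank models per benchmark (1 = best; ties share the best rank), then average (lower is better)."""
    models = list(table)
    benchmarks = list(next(iter(table.values())))
    ranks: dict[str, list[int]] = {m: [] for m in models}
    for b in benchmarks:
        for m in models:
            # Rank = 1 + number of models with a strictly higher score on this benchmark.
            ranks[m].append(1 + sum(table[o][b] > table[m][b] for o in models))
    return {m: mean(r) for m, r in ranks.items()}


table = {
    "model_a": {"MMStar": 60.0, "OCRBench": 70.0, "MathVista": 50.0},
    "model_b": {"MMStar": 55.0, "OCRBench": 80.0, "MathVista": 45.0},
    "model_c": {"MMStar": 40.0, "OCRBench": 60.0, "MathVista": 55.0},
}
avg_scores = average_scores(table)
avg_ranks = average_ranks(table)
print(avg_scores)
print(avg_ranks)
```

Here model_a and model_b tie on average score, but model_a ranks better on average because it is never worst on any benchmark.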

🤗LLM-Perf Leaderboard🏋️

🤗 The LLM-Perf leaderboard uses Optimum-Benchmark to benchmark the latency, throughput, memory, and energy consumption of LLMs across different hardware, backends, and optimizations. Community members can request evaluation of new base models through the 🤗 Open LLM Leaderboard, or request evaluation of new hardware/backend/optimization configurations through the 🤗 LLM-Perf leaderboard or the Optimum-Benchmark repository.

For consistency, each evaluation runs on a single GPU: the LLM is run with a batch size of 1 and a 256-token prompt, generating 64 tokens, for at least 10 iterations and at least 10 seconds. Energy consumption is measured in kWh using CodeCarbon, and memory metrics include maximum allocated memory, maximum reserved memory, and maximum used memory. All benchmarks are run with the benchmark_cuda_pytorch.py script to ensure reproducibility.
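The stopping rule of at least 10 iterations and at least 10 seconds can be expressed as a small timing loop. The sketch below times a hypothetical `generate` callable (assumed to run one 256-token-prompt, 64-token generation) with `time.perf_counter` and reports mean latency and decoded tokens per second. It is not Optimum-Benchmark's code: it ignores GPU synchronization, which a real CUDA benchmark needs before reading the clock, and it omits energy and memory tracking.

```python
import time
from statistics import mean
from typing import Callable


def benchmark(generate: Callable[[], None], new_tokens: int = 64,
              min_iterations: int = 10, min_seconds: float = 10.0) -> dict[str, float]:
    """Repeat `generate` until BOTH the iteration and the duration minimums are met."""
    latencies: list[float] = []
    start = time.perf_counter()
    while len(latencies) < min_iterations or time.perf_counter() - start < min_seconds:
        t0 = time.perf_counter()
        generate()  # hypothetical: one full generation of `new_tokens` tokens
        latencies.append(time.perf_counter() - t0)
    avg = mean(latencies)
    return {"iterations": len(latencies), "mean_latency_s": avg,
            "tokens_per_s": new_tokens / avg}


def fake_generate() -> None:
    time.sleep(0.01)  # stand-in for a model call


# Shortened minimum duration so the demo finishes quickly.
result = benchmark(fake_generate, min_seconds=0.2)
print(result)
```

Requiring both minimums means fast models get enough repetitions to smooth out noise, while slow models still complete at least 10 measured runs.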


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His latest endeavor is the launch of Marktechpost, an Artificial Intelligence media platform. The platform stands out for its in-depth coverage of Machine Learning and Deep Learning news in a manner that is technically accurate yet easily understandable to a wide audience. The platform has gained popularity among its audience with over 2 million views every month.
