In 2022, before ChatGPT upended the world of artificial intelligence, Etched decided to invest heavily in the Transformer architecture.

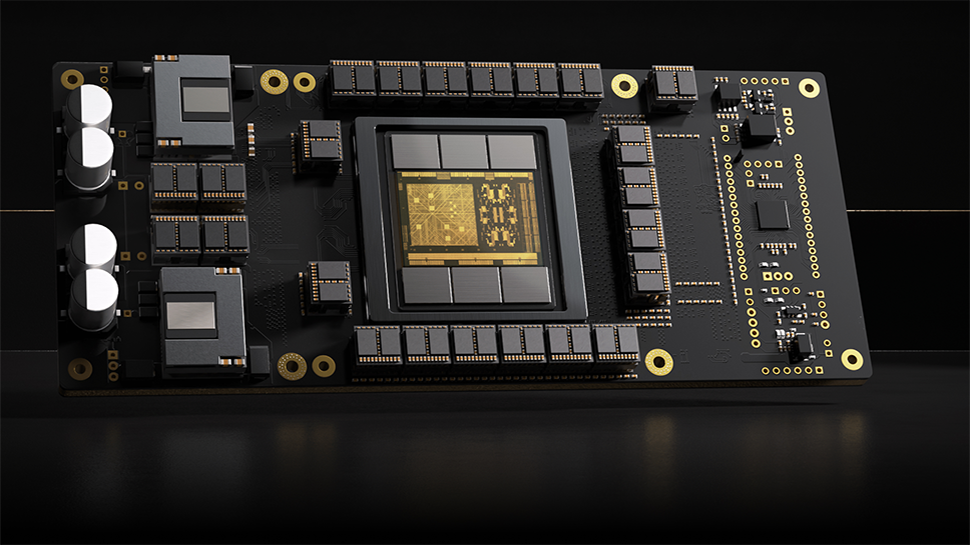

With this focus, the startup developed Sohu, an ASIC designed specifically for Transformer models, the architecture behind products such as ChatGPT, Sora, and Gemini.

Sohu’s trade-off is that it cannot run other machine learning models such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), or long short-term memory networks (LSTMs). In exchange, Etched says, it delivers unparalleled performance on Transformers, beating Nvidia’s flagship GPU, the B200, by about 10 times.

Scalability is key

Because Sohu is designed specifically for Transformer models, it avoids the complex and often unnecessary control flow logic that general-purpose GPUs must handle to support a wide variety of applications.

By focusing solely on the computational needs of the Transformer, Sohu is able to allocate more resources to performing mathematical operations, which are the core task of Transformer processing.

This streamlined approach enables Sohu to achieve over 90% utilization of its FLOPS capacity, significantly higher than the roughly 30% utilization of general-purpose GPUs. This means that Sohu can perform many more calculations in a given period of time, making Transformer-based tasks much more efficient.
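To make the utilization arithmetic concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that both chips have the same peak FLOPS, so that only the utilization figures quoted above (90% versus roughly 30%) differ. The function name `effective_flops` and the `PEAK_FLOPS` value are hypothetical placeholders, not vendor specifications.

```python
def effective_flops(peak_flops: float, utilization: float) -> float:
    """Sustained throughput = peak throughput x fraction of peak actually used."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak_flops * utilization


# Assumption: an identical, made-up peak for both chips, so that the
# comparison isolates the effect of utilization alone.
PEAK_FLOPS = 1.0e15

asic_sustained = effective_flops(PEAK_FLOPS, 0.90)  # "over 90%" from the article
gpu_sustained = effective_flops(PEAK_FLOPS, 0.30)   # "roughly 30%" from the article

# Ratio of useful work done per unit time at equal peak compute.
speedup_from_utilization = asic_sustained / gpu_sustained
print(f"Speedup from utilization alone: {speedup_from_utilization:.1f}x")
```

Under these assumptions, higher utilization by itself accounts for roughly a threefold gain. Any larger gap would have to come from a difference in peak compute, which this sketch does not model.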

The use of Transformer models has increased dramatically around the world, with every major AI lab from Google to Microsoft working to further scale the technology. With throughput exceeding 500,000 tokens per second on Llama 70B, Sohu is, according to Etched, an order of magnitude faster and more cost-effective than next-generation GPUs.

Etched believes the move to purpose-built chips is inevitable, and it aims to stay ahead of the curve. “Current and next-generation state-of-the-art models are Transformers,” the company says. “Tomorrow’s hardware stacks will be optimized for Transformers. Nvidia’s GB200 has special support for Transformers (TransformerEngine). ASICs like Sohu entering the market mark the point of no return.”

Etched reports that it is scaling up production of Sohu and already has significant orders. “We believe in the hardware lottery: the models that win are the ones that can run fastest and cheapest on hardware. Transformers are so powerful, useful, and profitable that they will dominate all major AI computing markets before alternatives are ready.”

The company adds, “A Transformer killer will need to run on GPUs faster than Transformers run on Sohu, and if that happens, we’ll build an ASIC for it as well.”