Most AI CEOs respond that being too optimistic might make the glass explode. Elon Musk believes there’s a good chance that AI will destroy humanity, which is why his company, xAI, is doing all it can to prevent it. OpenAI CEO Sam Altman believes artificial intelligence can solve some of the planet’s toughest problems, but at the same time, it’s creating enough new ones that he has proposed vetting the best AI models with an equivalent of UN weapons inspectors.

Unfortunately, all of this is bogged down in the silliest of places. Acknowledging that AI is equal parts promise and danger, and that the only way out of the Möbius strip is to keep building it until good eventually wins or doesn’t, is Wayne LaPierre’s domain. The former head of the National Rifle Association built liability avoidance into his sales pitch, declaring that “the only thing that stops a bad guy with a gun is a good guy with a gun.” I don’t think the AI giants are as bad as LaPierre, and there are national security arguments for why they should stay ahead of China. But are we really going to lumber into an arms race that enriches AI makers while absolving them of responsibility? Isn’t that too American, even for the United States?

These concerns wouldn’t be as big if the first big AI crisis were a hypothetical event in the distant future, but there is a day circled on the calendar: November 5, 2024. Election Day.

For over a year, FBI Director Christopher A. Wray has been warning about a wave of election interference that could make 2016 look cute. In 2024, no sane foreign adversary needs an army of human trolls. AI can churn out literally billions of pieces of realistic-looking and -sounding misinformation about when, where, and how to vote. It can also easily customize political ads for individual targets. In 2016, Donald Trump’s digital campaign manager Brad Parscale spent countless hours customizing small thumbnail election ads for groups of 20 to 50 people on Facebook. It was a miserable job, but an incredibly effective way to make people feel noticed by the campaign. In 2024, Brad Parscale is software, and any chaos agent can harness it for a fraction of the cost. There are increasing legal restrictions on advertising, but AI can create fake social profiles and target you squarely in your individual feeds. Deepfakes of candidates have been around for months, and AI companies continue to release tools that make all this material faster to produce and more convincing.

About 80% of Americans believe some form of AI misuse is likely to affect the outcome of the November presidential election. Director Wray has placed at least two election crimes coordinators in each of the FBI’s 56 field offices. He has urged people to look more carefully at media sources. In public, he is a symbol of calm. “Americans can and should have confidence in our electoral system,” he said at an international cybersecurity conference in January. Privately, an elected official familiar with Director Wray’s thinking told me that the director faces the paradox of a middle manager: heavy responsibility but limited power. “[Wray] continues to raise issues, but he doesn’t play politics and he doesn’t make policy,” the official said. “The FBI enforces the law. He says, ‘Ask Congress where the law is.’”

Is this becoming one of those angry rallying cries against Congress? Well, in a way it is, because the Senate has spent a year blabbing about the need to balance speed with thoroughness when regulating AI, and then failed to deliver on either. But stick with me for the punch line.

On May 15, the Senate released a 31-page AI roadmap that immediately invited friendly fire. “The lack of vision is a blow,” declared Alondra Nelson, former acting director of the White House Office of Science and Technology Policy under President Biden. The roadmap contains nothing to compel AI makers to step up or to help Wray: there are no content verification standards and no mandates for watermarking AI content, let alone digital privacy laws that would criminalize deepfakes of voices or likenesses. If the AI industry thinks it should be both arsonist and firefighter, Congress seems happy to provide it with matches and water. But we can at least savor how Sen. Todd Young (R-Indiana), a self-described member of the Senate’s AI gang, summarized the abdication of responsibility: “If ambiguity is necessary to reach an agreement, we embrace ambiguity.” Dario Amodei, you’re free to go.

You know the Spider-Man meme? The one where two Spider-Men point at each other in confusion, each accusing the other of being a fake, while the criminal runs away. Our version has something like 2.5 Spider-Men. Because while AI companies shrug and Congress praises ambiguity, it’s social media companies that will spread the majority of the misinformation. Next to AI, social media never seemed all that terrible.

No, it really was. It was Meta CEO Mark Zuckerberg who initially dismissed the idea that Russian misinformation affected the 2016 election, calling it “pretty crazy.” But a year later, Zuckerberg admitted he was wrong and launched what security and law enforcement officials at Meta and other platforms call a kind of “glasnost.” The platforms and the government both recognized the risk of failure and found ways to work together, often using their own AI software to detect anomalies in posting patterns. Then they shared their findings and weeded out bad content before it spread. The 2020 and 2022 elections were more than just proofs of concept. They were successes.

However, all these collaborations took place before the AI boom and ended in July of last year. Murthy v. Missouri, a lawsuit filed by the Republican attorneys general of Louisiana and Missouri, argued that federal interactions with social media platforms over removing misinformation constituted “censorship” and violated the First Amendment. Was the lawsuit an act of political retaliation motivated by a misconception that social media leans left? Yes. But you don’t have to be partisan to imagine how interactions between social media platforms and, say, a president with narcissistic personality disorder, might turn coercive. U.S. District Judge Terry Doughty sided with the plaintiffs and issued a preliminary injunction, which the Fifth Circuit Court of Appeals upheld.

In March, Sen. Mark R. Warner (D-Virginia), chairman of the Senate Intelligence Committee, made clear the consequences of the decision: eight months of complete silence between the federal government and social media companies about misinformation. “This should terrify us all,” Warner said. The Supreme Court is due to rule on Murthy v. Missouri next month, and there are preliminary signs that the justices are at least skeptical of the lower court’s decision.

Whatever the court’s decision, most of the social media executives I’ve spoken to are intoxicated with their own sense of righteousness. For once they were caught doing the right thing! It’s not their fault the court intervened! They remind me of my teenage years, when it was the neighbor’s son who got in trouble. And they conveniently forget that misinformation is not just the existence of lies; it’s the erosion of reliable facts. Meta, Google, X and others have led the attack on journalism. First they killed its business model, then under the ruthless banner of “content” they equated news with makeup tutorials and ASMR videos, and finally they removed it from the feed altogether. Maybe half a Spider-Man is generous.

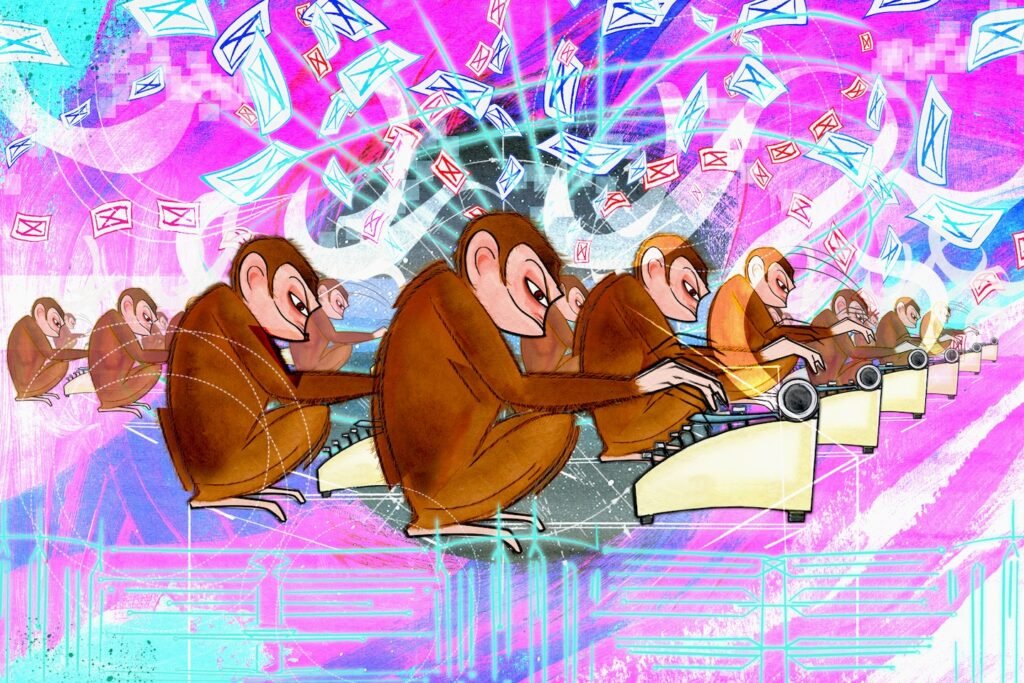

We don’t know what November will be like. The obvious answer is that it won’t be great. It’s unlikely to be a single, imaginative, “War of the Worlds”-style deception, such as a presidential candidate being put in a compromising position or a terrorist attack being threatened, which would at least have the advantage of being visible and easier to expose. What I fear more is a million AI monkeys wreaking low-level mayhem with a million AI typewriters, especially in local elections. Rural counties are plagued by bare-bones staffing and often sit in the middle of news deserts. They’re the perfect petri dish for information viruses that might go unnoticed. Some states are training election officials and poll workers to spot deepfakes, but there’s no chance that all of the country’s 100,000+ polling places will be prepared, especially when technology advances so quickly that we’re not sure what to prepare for.

The best way to clean up a mess is to not make it in the first place. This is the virtue of responsible adults and, until recently, of a functioning democracy. The Supreme Court may overturn the injunction in Murthy. Perhaps AI companies will voluntarily commit to increased surveillance and delay the release of new AI until 2025. Maybe the FBI’s Wray can sway Congress. But if none of these possibilities comes to fruition, we’ll have to prepare for Election Day in LaPierre Land. Prayers, everyone.